The topic of drive fragmentation might be a little out of fashion these days, but since I spent a great deal of my youth watching PC Tools defragment my drive in a graphically pleasing fashion, I am inclined to think that drive fragmentation (when excessive) can severely reduce both computer performance and hard drive life.

While this might be true for the everyday user, it is particularly true for corporations and enterprises that need their data to be:

- accessible,

- quickly accessible,

- accessible for a long time

In a common computer use scenario, most of the files are there for the computer to read and use, either as software that has to be loaded into memory or as documents that have to be shown to the user. Writing to the hard drive is an uncommon operation (when you put it against the number of reads), and thus drive fragmentation, however present, is in fact easily ignored.

Continuous stream recording, enter…

In my business (my clients’ businesses, to be exact) the hard drives work the opposite way. They WRITE all the time and read only occasionally. And the problem that will surely lead to fragmentation is that in most situations they need to write MULTIPLE long files continuously. Let me try to explain, first from the aspect of why, then moving on to what…

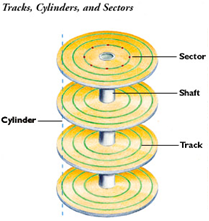

When running either VideoPhill Recorder to record video or StreamSink to record internet media streams, the user in most cases has MULTIPLE channels recorded on one computer. The files created by that recording are commonly all created at the same time and grow continuously until they are closed. Since Windows, as it is now, is an operating system that can’t reserve drive space in advance (maybe it can, but the software doesn’t know how long the files will be), the space for them is allocated as time goes by. If we have 4 files that are written slowly but concurrently (and grow at the same time), we’ll almost certainly end up with the following situation on the hard drive (I’m talking ONLY about the data stored here, and am simplifying physical hard drive storage to a continuous slate):

file1_block1

file2_block1

file3_block1

file4_block1

file1_block2

file2_block2

file3_block2

file4_block2

.

.

.

file1_blockN

file2_blockN

file3_blockN

file4_blockN

That means fragmentation. A file isn’t stored in continuous blocks but is scattered evenly across the drive, so it can’t be read sequentially. You might be lucky and your blocks could be scattered in a way that leaves the sectors on the drive adjacent, so this won’t pose a problem, but what are the chances?
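To put a number on the layout above, here is a minimal sketch that simulates it. It assumes a deliberately naive allocator that hands out the next free block to whichever file asks, in strict round-robin order; real file systems are smarter than this, and the function names are made up for illustration.

```python
from itertools import groupby


def interleaved_layout(num_files, blocks_per_file):
    """Simulated drive: each file receives the next free block in turn."""
    return [
        f"file{f}"
        for _ in range(blocks_per_file)
        for f in range(1, num_files + 1)
    ]


def count_fragments(layout, name):
    """Count the contiguous runs of blocks that belong to `name`."""
    return sum(1 for owner, _ in groupby(layout) if owner == name)


# Four channels recorded concurrently, 100 blocks each.
layout = interleaved_layout(num_files=4, blocks_per_file=100)
fragments_concurrent = count_fragments(layout, "file1")

# The same file written alone lands in a single contiguous run.
fragments_alone = count_fragments(interleaved_layout(1, 100), "file1")
```

Under these assumptions every block of a concurrently written file becomes its own fragment, while a file written alone stays in one piece.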

And when file1 gets deleted, what remains on the hard drive? Blocks filled with nothing, left there for other files to fill. New files will try to fill them, and the drive will soon be completely jumbled. It will all be hidden from you by the OS, but the OS will still have to deal with it.
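The holes left behind can be sketched the same way. This is again a toy model, assuming the simplified round-robin layout from the listing above, with `None` marking a free block; `free_extents` is a hypothetical helper, not something the OS exposes.

```python
def free_extents(layout):
    """Return (start, length) for every run of free (None) blocks."""
    extents = []
    start = None
    for i, owner in enumerate(layout):
        if owner is None and start is None:
            start = i                      # a free run begins here
        elif owner is not None and start is not None:
            extents.append((start, i - start))
            start = None                   # the free run has ended
    if start is not None:                  # free run reaches end of drive
        extents.append((start, len(layout) - start))
    return extents


# Four interleaved files, five blocks each, then file1 is deleted.
layout = [f"file{f}" for _ in range(5) for f in range(1, 5)]
after_delete = [None if owner == "file1" else owner for owner in layout]
holes = free_extents(after_delete)
```

Each hole is a single block wide, so a new file written into that space starts its life as fragmented as the one it replaced.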

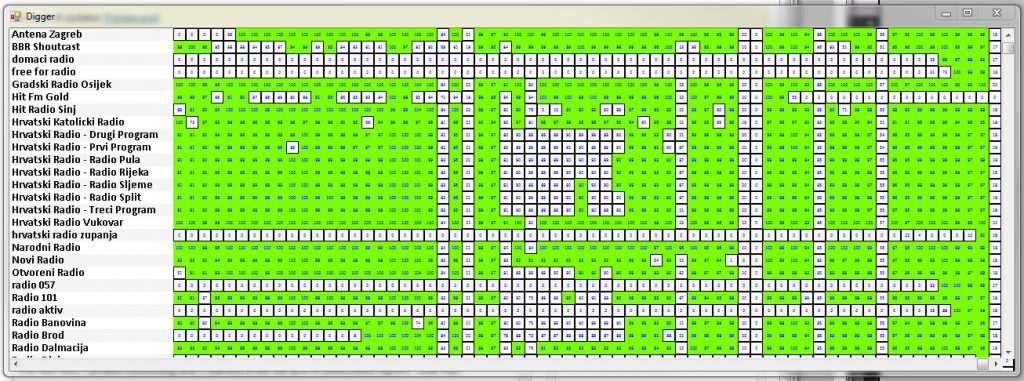

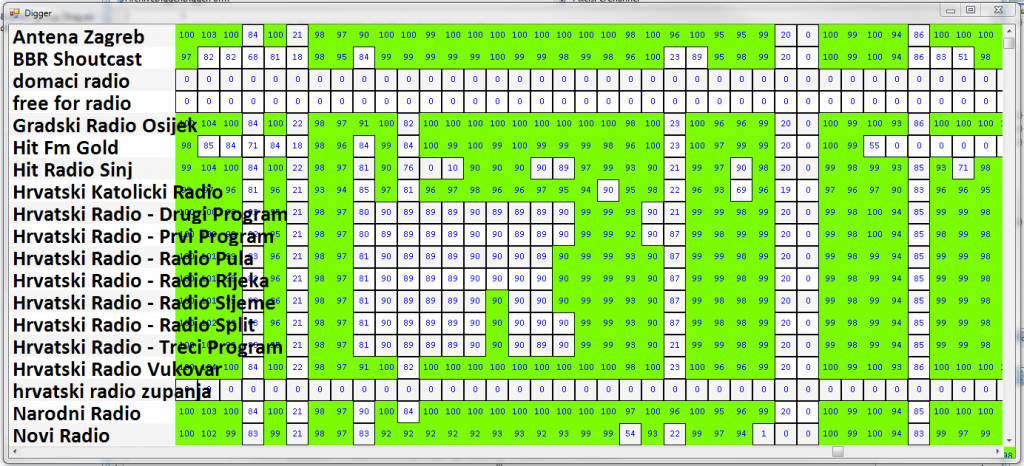

And that is the story of 4 channels. What about a situation where you have 60 channels recorded on one machine (I’m talking about internet stream recording, of course)? Such an archive can be found here: http://access.streamsink.com/archive/

If you aren’t convinced that this really IS a problem, you can stop reading now.

Rescue #1 – Drive Partitioning

This is feasible in situations where only a small number of channels need to be recorded. If you have 4 channels, you create 4 partitions, and each partition will have nice continuous files written to it. Done.

However, you can’t have 50 partitions on one drive and get away with it.

Rescue #2 – Queued File Moving

Another solution, for a large number of channels, is to use a temporary partition for the initial recording and then move the files to their permanent location later, but ONE FILE at a time, in a queue.

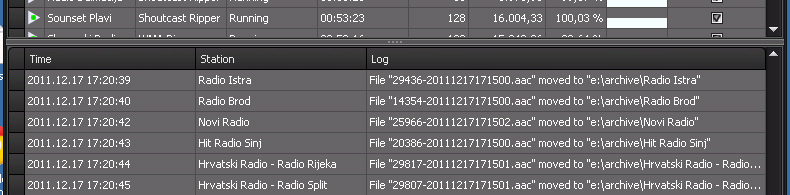

This is implemented in StreamSink, and it even has the ability to throttle the data rate when moving the files to another drive. The only problem here is that you sacrifice the temporary hard drive, because it takes the beating from fragmentation.
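The idea can be sketched in a few lines. This is not StreamSink's actual code, just a minimal illustration under my own assumptions: closed files are put on a queue, a single worker moves them one at a time, and the copy sleeps whenever it gets ahead of the target rate. The function names and the `max_bytes_per_sec` parameter are invented for the example.

```python
import os
import queue
import time


def throttled_copy(src, dst, max_bytes_per_sec, chunk_size=64 * 1024):
    """Copy src to dst, sleeping as needed to stay under the target rate."""
    start = time.monotonic()
    copied = 0
    with open(src, "rb") as fin, open(dst, "wb") as fout:
        while True:
            chunk = fin.read(chunk_size)
            if not chunk:
                break
            fout.write(chunk)
            copied += len(chunk)
            # How long the copy should have taken so far at the target rate.
            expected = copied / max_bytes_per_sec
            elapsed = time.monotonic() - start
            if expected > elapsed:
                time.sleep(expected - elapsed)
    return copied


def drain_queue(pending, dest_dir, max_bytes_per_sec):
    """Move queued files strictly one at a time to dest_dir."""
    moved = []
    while True:
        try:
            src = pending.get_nowait()
        except queue.Empty:
            break
        dst = os.path.join(dest_dir, os.path.basename(src))
        throttled_copy(src, dst, max_bytes_per_sec)
        os.remove(src)  # free the temporary space only after the copy
        moved.append(dst)
    return moved
```

Because only one file is being written to the permanent drive at any moment, each file has a fair chance of landing in contiguous blocks, and the throttle keeps the move from competing with the recording itself.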

Rescue #3 – Using RAM Drive on Method #2

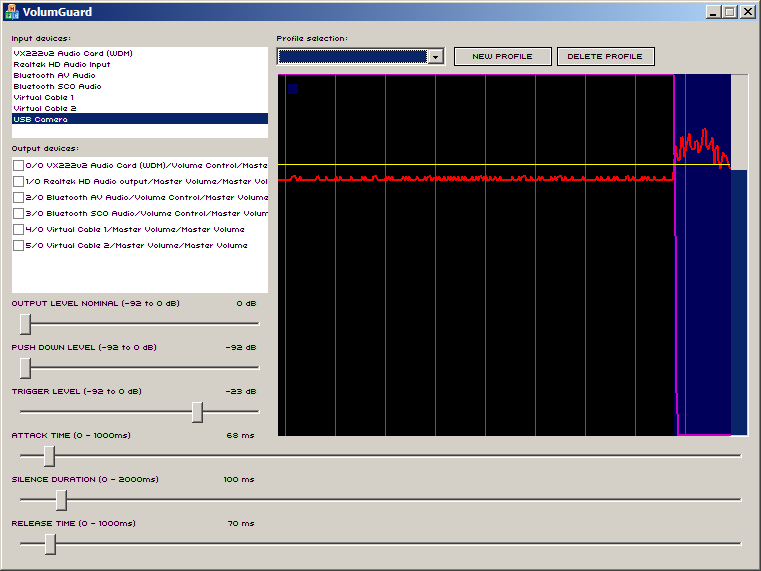

While I was writing the article about NAS, a thought flashed across my mind: can we avoid writing to the temporary drive and reduce the load ONCE more?

Yes, we can. I know that RAM drives are also out of fashion, but here one will come in handy. It’s a shame that support for one isn’t already included in the system, so with a little googling I found this: http://www.ltr-data.se/opencode.html/#ImDisk

I installed it on the testing server and re-configured the application to use the new temporary folder, and from now on it runs so smoothly I can’t hear it anymore.

Some technical stuff:

- in this instance, I am currently recording 62 channels, and the cumulative rate for them is around 5 megabits/second

- my files have a duration of 5 minutes, which means that recorded chunks are closed and moved to permanent storage every 5 minutes

- during those 5 minutes, the files grow only so much that the combined content for those 5 minutes won’t exceed 200 megabytes

- I created a 512 megabyte RAM drive, just to be safe
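The numbers above can be checked with a quick back-of-the-envelope calculation. It assumes decimal units (1 megabyte = 8 megabits) and treats the 5 megabits/second figure as a steady average; the variable names are mine.

```python
total_rate_mbit_per_s = 5      # cumulative rate across all 62 channels
chunk_seconds = 5 * 60         # files are closed and moved every 5 minutes
ram_drive_mb = 512             # size of the RAM drive

# Data written to the temporary folder during one 5-minute cycle.
data_per_cycle_mb = total_rate_mbit_per_s * chunk_seconds / 8

# How many full cycles fit on the RAM drive at once.
cycles_that_fit = ram_drive_mb / data_per_cycle_mb
```

That comes to 187.5 megabytes per cycle, which matches the "under 200 megabytes" figure. The 512 megabyte drive holds a little more than two and a half cycles, so the previous batch can still be waiting in the move queue while the next one is being recorded.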

Conclusion

Take care of your hard drive, and don’t dismiss old tech such as RAM drives just yet.

If I were to implement this at the application level, I would have to spend a great deal of time, and for some media types it wouldn’t even be possible. Windows Media, for example, writes to disk, or to other places only if you employ magic…